When managing one or more Azure functions, I prefer to have centralized configuration management, especially in the context of a distributed system. This provides me a clear place where all configuration comes together. Azure App Configuration enables us to do this. However, when you change a configuration, it's convenient if applications apply the new values immediately without the need to restart the app. Setting up an Azure Function to achieve this is unfortunately not straightforward yet. In this post, I will show how I have solved this in a demo application.

Some details have been omitted for readability. If you feel something is missing, please check the Github repository where the final result can be found. Did this blog help you? Then don't forget to leave a star at the Github project.

Example application

I will start with a simple “hello world” application. There is one function present that works with an HttpTrigger. The output of the function is a piece of text. Initially, the output will be “Hello world”. I will replace 'world' with a name configured in Azure App Configuration later on.

using Microsoft.Azure.WebJobs.Extensions.Http;

namespace JuulHobert.Blog.FunctionAppWithAppConfig;

public class HelloWorld

{

[FunctionName("HelloWorld")]

public IActionResult Run(

[HttpTrigger(AuthorizationLevel.Anonymous, "get", Route = "hello-world")]

HttpRequest req) => new ContentResult

{

Content = "Hello World!",

ContentType = "text/plain"

};

}

I can run this Azure Function locally, and when I do and open http://localhost:7071/hello-world in a browser, I see the text.

The fixed text Hello world is returned. The next step is to use a value from the app configuration and return it in the response.

App configuration

The next step is to add some libraries.

It is necessary to configure dependency injection to be able to receive the configuration in the constructor of the HelloWorld class. I do this by adding a Startup class. In addition, I also add support for feature flags. I will use this to put the new functionalities behind a feature flag. This allows me to apply trunk based development. This is a topic that deserves a separate blog. For now, it is sufficient to know that this allows us to turn functionalities on and off.

using System;

using Azure.Identity;

using JuulHobert.Blog.FunctionAppWithAppConfig;

using Microsoft.Azure.Functions.Extensions.DependencyInjection;

using Microsoft.Extensions.Configuration;

using Microsoft.Extensions.DependencyInjection;

using Microsoft.FeatureManagement;

[assembly: FunctionsStartup(typeof(Startup))]

namespace JuulHobert.Blog.FunctionAppWithAppConfig;

public class Startup : FunctionsStartup

{

private const string AppConfigEndpointEnvironmentVariableName = "AppConfigEndpoint";

public override void ConfigureAppConfiguration(IFunctionsConfigurationBuilder builder)

{

var credentials = new DefaultAzureCredential();

var appConfigEndpoint = Environment.GetEnvironmentVariable(AppConfigEndpointEnvironmentVariableName);

if (string.IsNullOrEmpty(appConfigEndpoint))

{

throw new InvalidOperationException("AppConfigEndpoint is not set");

}

builder.ConfigurationBuilder.AddAzureAppConfiguration(options =>

options

.Connect(new Uri(appConfigEndpoint), credentials)

.Select($"{ServiceOptions.SectionName}:*")

.ConfigureKeyVault(kv => kv.SetCredential(credentials))

.UseFeatureFlags()

.ConfigureRefresh(x => x.Register("JuulHobertBlog:Name", refreshAll: true)));

}

public override void Configure(IFunctionsHostBuilder builder)

{

builder.Services

.AddAzureAppConfiguration()

.AddFeatureManagement()

.Services

.AddOptions<ServiceOptions>()

.Configure<IConfiguration>((settings, configuration) =>

{

configuration.GetSection(ServiceOptions.SectionName).Bind(settings);

});

}

}

public class ServiceOptions

{

public const string SectionName = "JuulHobertBlog";

public string Name { get; set; } = string.Empty;

}

I read the environment variable AppConfigEndpoint in the Startup class and use managed identity for authorization. In my code, I use DefaultAzureCredential, which tries different underlying credentials to gain access. I ensure that on my local machine, I am logged in with the command az login. The Azure Function deployed in Azure will use managed identity. Managed identity ensures that I no longer have to worry about securely storing credentials, as Azure now takes care of this for me.

It's time to modify the HelloWorld class. I'm going to return the configured name with the help of app configuration. I will put this new functionality behind a feature flag so that the change can be turned on and off.

using System.Threading.Tasks;

using Microsoft.AspNetCore.Http;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.Extensions.Options;

using Microsoft.FeatureManagement;

namespace JuulHobert.Blog.FunctionAppWithAppConfig;

public class HelloWorld

{

private const string FeatureConfigName = "ConfigName";

private readonly IOptions<ServiceOptions> _options;

private readonly IFeatureManager _featureManager;

public HelloWorld(

IOptionsSnapshot<ServiceOptions> options,

IFeatureManager featureManager)

{

_options = options;

_featureManager = featureManager;

}

[FunctionName("HelloWorld")]

public async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Anonymous, "get", Route = "hello-world")]

HttpRequest req)

{

var featureEnabled = await _featureManager.IsEnabledAsync(FeatureConfigName);

var content = featureEnabled ? $"Hello {_options.Value.Name}" : "Hello World!";

return new ContentResult

{

Content = content, ContentType = "text/plain"

};

}

}

If I try to start the Azure Function locally now, it doesn't work anymore. I get the error shown below.

Error building configuration in an external startup class. JuulHobert.Blog.FunctionAppWithAppConfig: AppConfigEndpoint is not set.

First, I need to create an app configuration and set the environment variable.

Create an App configuration manually

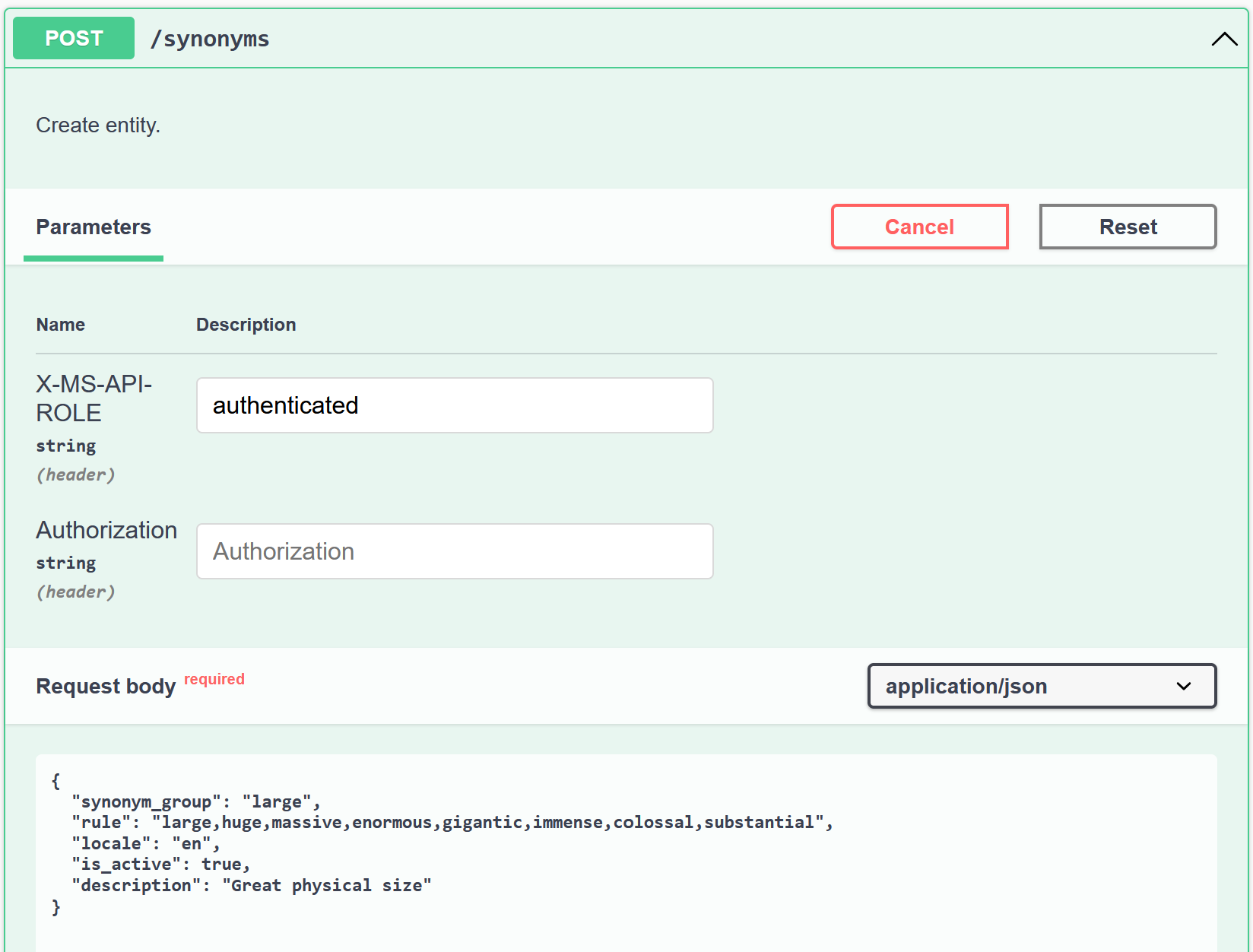

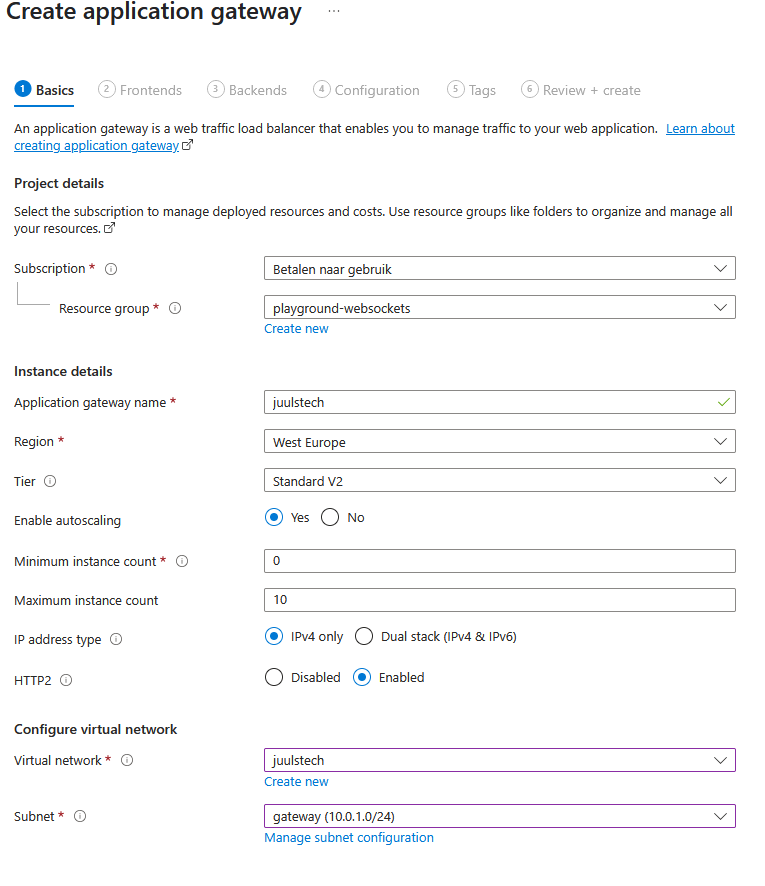

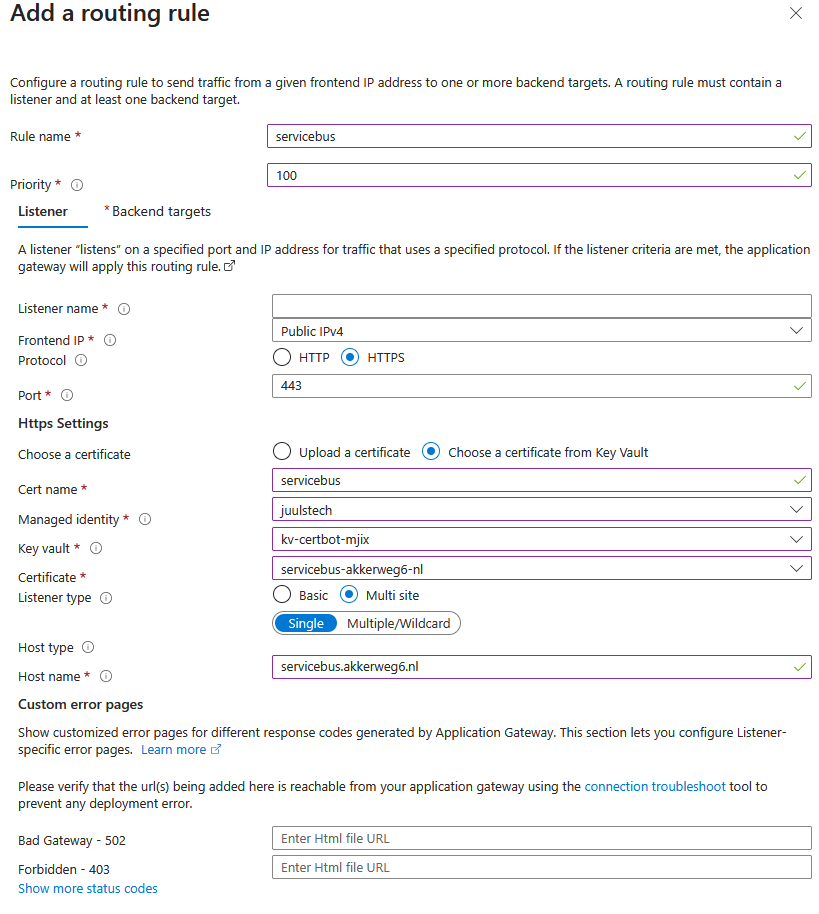

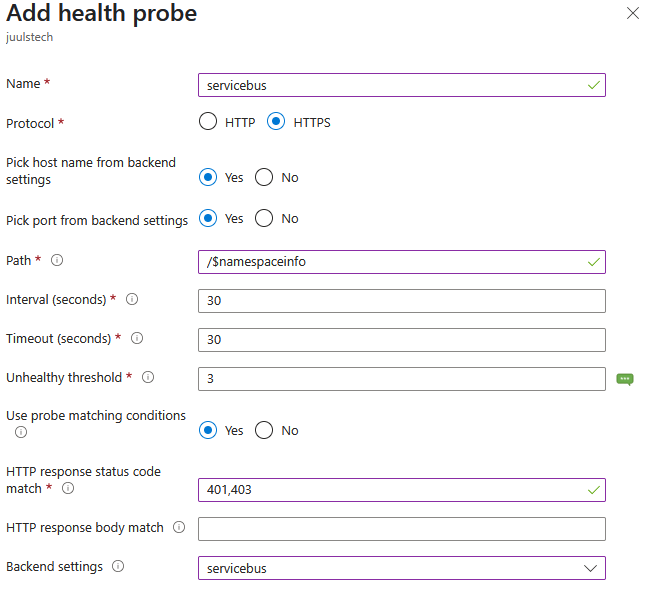

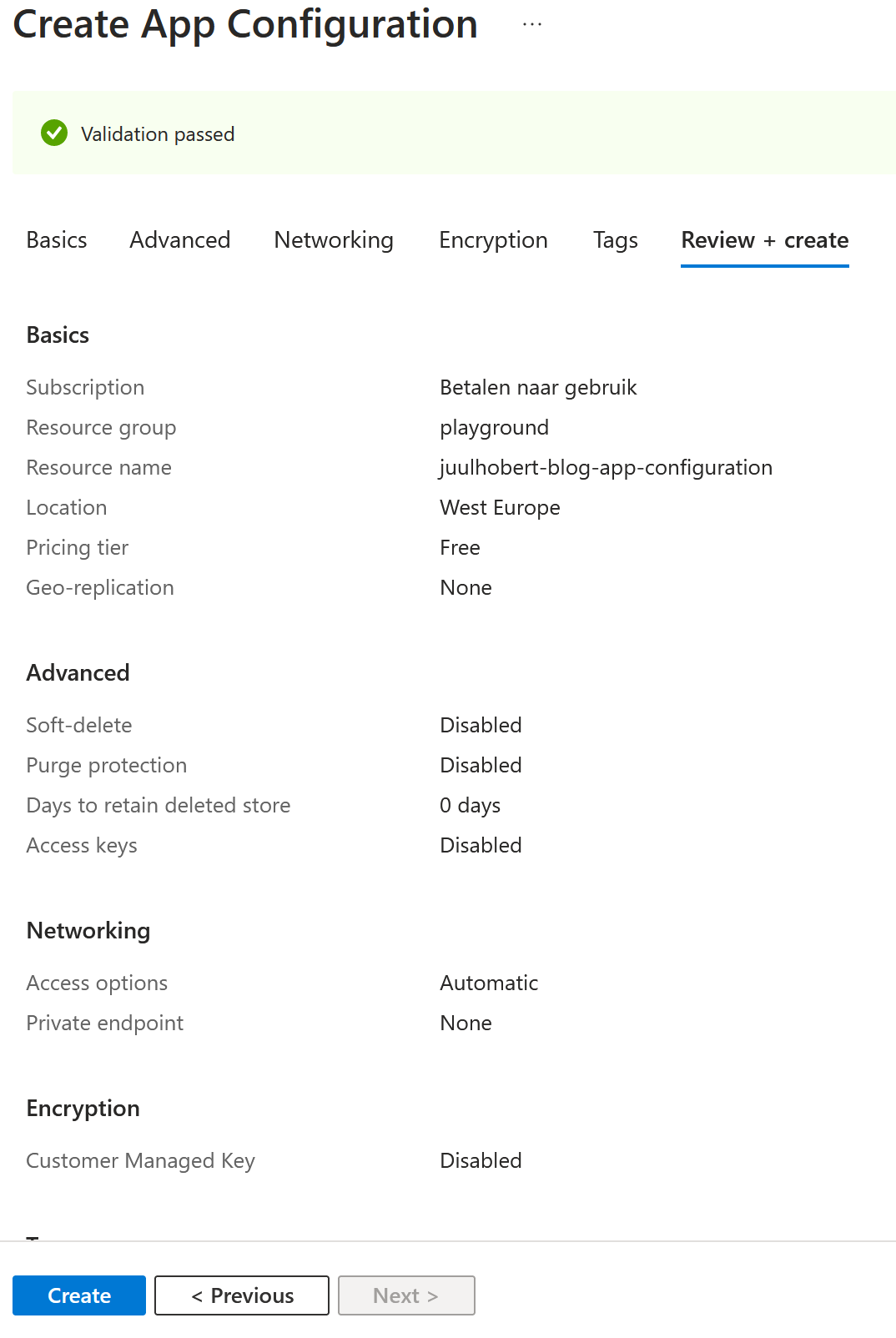

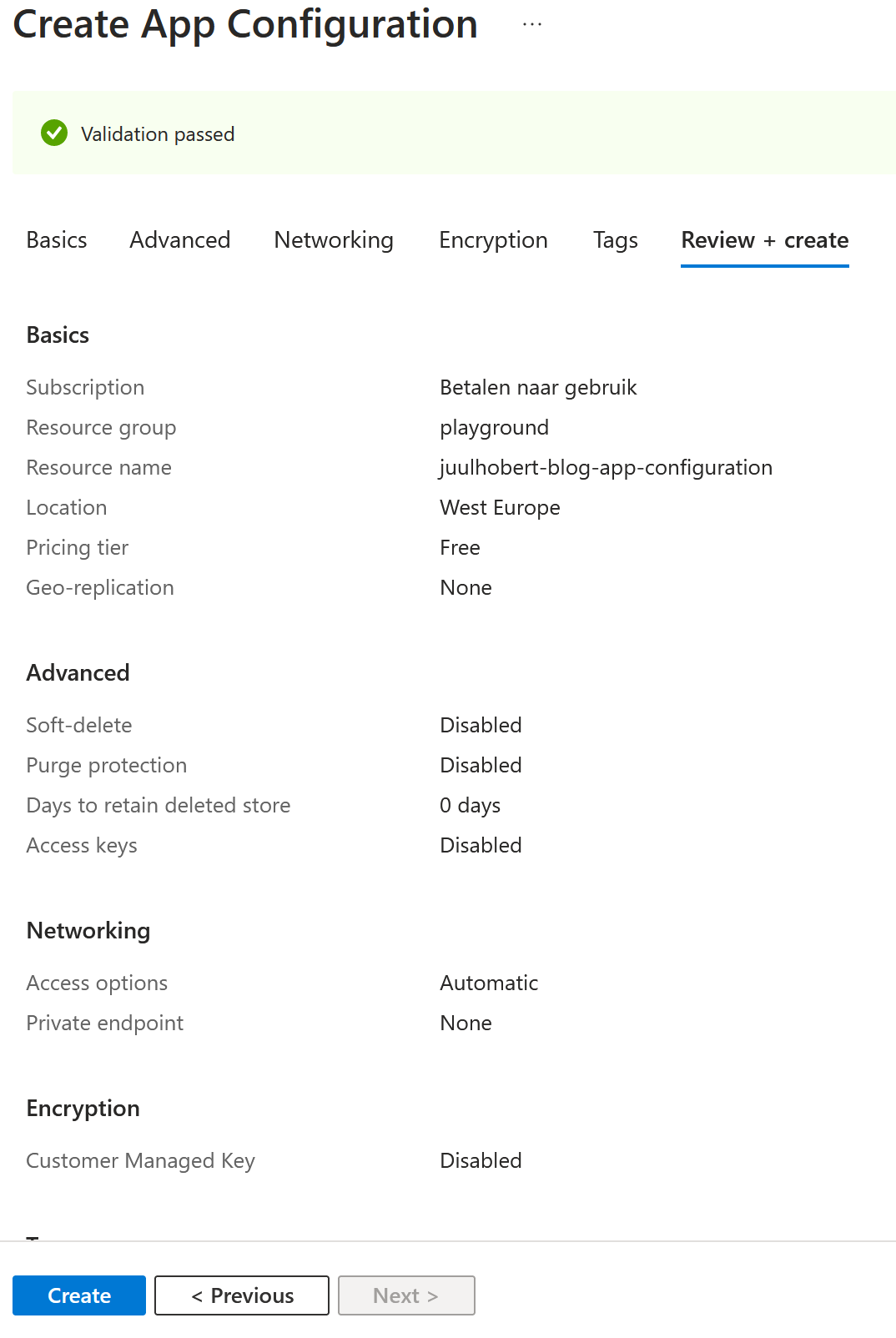

I create the app configuration in the Azure portal. I do this using the settings shown in the image. I choose the pricing tier “Free” and make the configuration accessible via the public internet. Do not use these settings for a production environment!

I assign myself the right App Configuration Data Owner. This allows me to modify the configuration and run the Azure function locally while reading the configuration. I know from experience that it can take some time before the assignment is allocated.

I modify the file local.settings.json and include the endpoint in the configuration. Additionally, I ensure that I have already logged in at least once using the command az login.

{

"IsEncrypted": false,

"Values": {

"AzureWebJobsStorage": "UseDevelopmentStorage=true",

"FUNCTIONS_WORKER_RUNTIME": "dotnet",

"AppConfigEndpoint": "https://juulhobert-blog-app-configuration.azconfig.io"

}

}

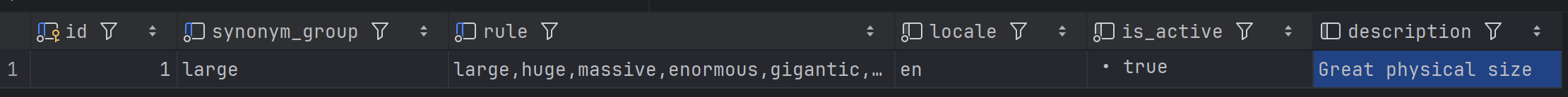

It's time to start up the application. When I open http://localhost:7071/hello-world in the browser, the result is still unchanged. This is correct, because I haven't added the feature flag yet. I add the feature flag and also set the configuration. I restart the azure function and view the page again in the browser. Hooray! I now see Hello Juul.

TimerTrigger

The simplest way to refresh the configuration, in my opinion, is by using a TimerTrigger. This is a trigger where a time schedule can be configured. The function will then be invoked at regular intervals. The code below will refresh the configuration every minute.

using System.Threading.Tasks;

using Microsoft.Azure.WebJobs;

using Microsoft.Extensions.Configuration.AzureAppConfiguration;

using Microsoft.Extensions.Logging;

namespace JuulHobert.Blog.FunctionAppWithAppConfig;

public class RefreshAppConfiguration

{

private readonly IConfigurationRefresherProvider _refresherProvider;

public RefreshAppConfiguration(

IConfigurationRefresherProvider refresherProvider)

{

_refresherProvider = refresherProvider;

}

[FunctionName("RefreshAppConfiguration")]

public async Task RunAsync(

[TimerTrigger("0 * * * * *")] TimerInfo timer,

ILogger logger)

{

foreach (var refresher in _refresherProvider.Refreshers)

{

if (await refresher.TryRefreshAsync())

{

logger.LogInformation("Refreshed configuration");

}

else

{

logger.LogWarning("Failed to refresh configuration");

}

}

}

}

This way of refreshing has its pros and cons. The major advantage is that it is the least complex solution. However, the disadvantages in my opinion are:

– Refreshing takes some time; a configuration adjustment will only take effect once the TimerTrigger fires.

– There is a limit to the amount of requests that can be made. If too many requests have been made, it is temporarily not possible to receive new values. Depending on the chosen SKU, it will take an hour or a day before this limit is reset.

Event Grid

Due to the mentioned drawbacks, I prefer working with an EventGridTrigger. This is a trigger that can be set to fire when the configuration is changed. The great advantage of this is that a change in the configuration takes effect immediately.

I will modify the code to be able to use Azure Event Grid. For this, I need to add the following library to my project.

After that, I will modify my existing class RefreshAppConfiguration and make use of the new trigger.

[FunctionName("RefreshAppConfiguration")]

public async Task RunAsync(

[EventGridTrigger] EventGridEvent eventGridEvent,

ILogger logger)

{

foreach (var refresher in _refresherProvider.Refreshers)

{

if (await refresher.TryRefreshAsync())

{

logger.LogInformation("Refreshed configuration");

}

else

{

logger.LogWarning("Failed to refresh configuration");

}

}

}

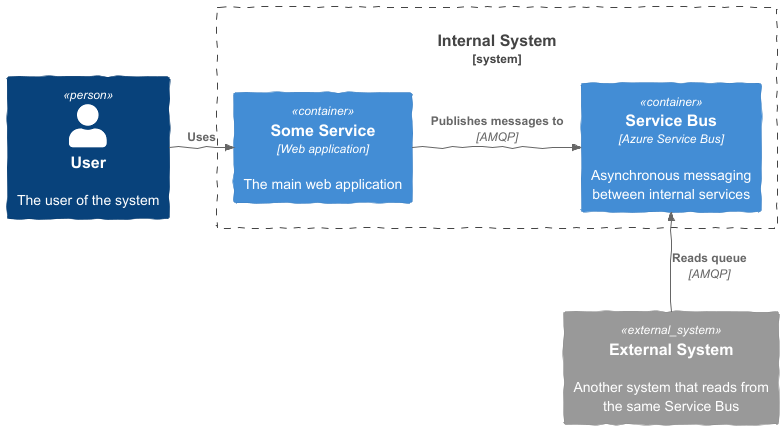

To get this working, a number of things need to be created and configured in Azure. In short, an Azure function needs to be running, an app configuration created, managed identity needs to be set up, and the environment variable AppConfigEndpoint needs to be configured. Due to the amount of actions required for this, I found it more convenient to use a Bicep script. Bicep is a language from Microsoft that allows you to define Azure resource infrastructure in a simple and readable way.

First, delete the manually created app configuration in Azure. Then clone the repository containing the Bicep script:

git clone https://github.com/juulhobert/az-function-with-app-configuration.git

Next, I adjust the values in main.bicepparam to my preferences and deploy the whole thing in Azure:

.\deploy.ps1 <resource-group-name> [subscription-id]

Now open the following URL in your browser https://<function-app-name>.azurewebsites.net/hello-world. In Azure, you can modify the configuration and you will see that the value is immediately reflected in the response.

Summary

In this article, I have shown how Azure Functions can be used together with App Configuration to centrally manage the configuration of distributed systems.

I have demonstrated two different ways to refresh app configurations. I prefer to use the method that makes use of Event Grid, as it allows changes to be applied immediately.

If you found the content of this article helpful, please show your appreciation by leaving a star on my Github project